Decoding information disorder on events in Israel/Palestine

Rachel Bessette

Nov 9, 2023

On October 7th, Hamas launched a surprise attack on Israeli territory that resulted in the deaths of 1,400 Israelis and the kidnapping of around 240 people. The unprecedented bombardment and ground invasion of the Gaza Strip that followed have now claimed over 10,000 Palestinian lives. This latest resurgence of violence has captivated and polarised audiences around the world, and an explosion of online content means that the battle is being waged not only on the ground but across the digital sphere.

For Dalil, a platform that aims to help media consumers in the MENA region and beyond navigate information disorder, renewed violent conflict between Israel and Gaza has presented a unique challenge. Because armed conflict is inherently fast-moving and complex, the likelihood of misinformation is higher. When conflict parties and their supporters also deliberately seek to control and manipulate online narratives through disinformation and propaganda, separating truth from fiction and bluster becomes a constant effort.

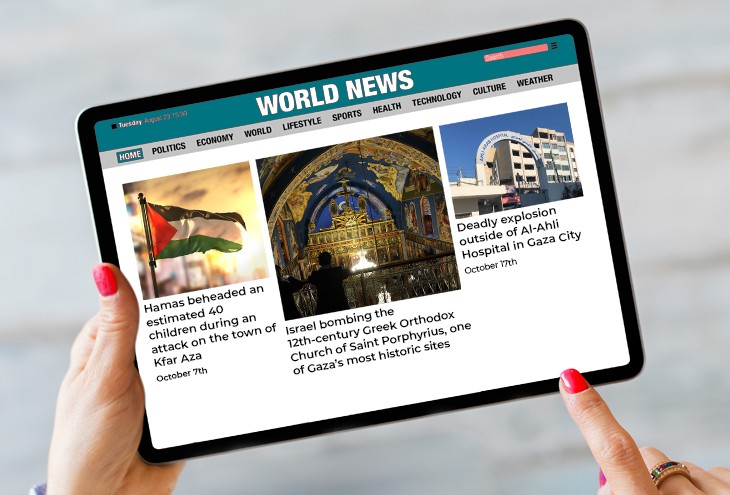

The accusation that Hamas beheaded around 40 children during an attack on the town of Kfar Aza on October 7th is among the most notable false claims of the conflict to date. Initially reported by i24News based on claims by Israeli reservists in the area, the narrative was picked up and promoted by the Israeli military and Prime Minister Benjamin Netanyahu. It was even repeated by US President Joe Biden before a retraction was issued the following day. Meanwhile, the claim went viral across major international news outlets, including BBC, CNN, CBS, Sky News, Metro News, Fox News, and Business Insider. No Israeli official ever confirmed the claim or presented evidence of its veracity.

Other instances of blatantly false information during the conflict include a TikTok video purportedly showing Israeli children kidnapped by Hamas being held in cages. Although the video was subsequently removed by its owner, who stated that it showed his own children and predated the conflict, it had already circulated widely. Meanwhile, another video shared on X claimed to show Israel bombing the 12th-century Greek Orthodox Church of Saint Porphyrius, one of Gaza’s most historic sites. The church later denied the claim in a statement.

Situations in which the truth is unclear have also allowed misinformation to spread. Take, for example, the deadly explosion outside Al-Ahli Hospital in Gaza City on October 17th. Despite multiple investigations, including by the BBC, Bellingcat, Forensic Architecture with Al-Haq (published on Twitter), Al Jazeera, the Associated Press and Al Arabiya, it is still unclear whether the explosion was caused by a malfunctioning Palestinian rocket or by ongoing Israeli bombardment of the area around the hospital. Even so, both sides have used the incident to reinforce their narratives about the other.

Israel and Hamas have also used propaganda and information warfare, to varying degrees, to control the narrative of the conflict. Israeli bot-monitoring firm Cyabra has reportedly found 40,000 fake accounts pushing pro-Hamas content, and the Israeli prosecutor's office has submitted over 4,000 requests to remove social media content alleged to incite violence associated with Hamas. Meanwhile, the Israeli Ministry of Foreign Affairs has released over 75 videos and ads on YouTube and X targeting residents of EU countries. The ads, some of which feature graphic content, seek to portray Hamas as brutal and savage while fostering sympathy for Israel. With Gaza now in a near-total network blackout due to damage to telecommunications infrastructure, and with heavy online censorship of content seen as sympathetic to Hamas, experts say it is difficult to imagine pro-Palestinian groups competing equally with Israel on the social media battlefield.

Indeed, social media platforms are hardly a level playing field or a neutral space. Since the start of the conflict, social media platforms, particularly X (formerly Twitter), have at worst stoked dis- and misinformation or allowed it to spread unabated, and at best have policed messaging around the conflict unevenly. A recent review by POLITICO found a high prevalence of violent content on X following the October 7th attacks, including a large number of videos purportedly showing Hamas members killing civilians and Israeli soldiers. The proliferation of violent content on the platform was so extreme that the EU launched an investigation into X over its alleged failure to comply with the EU Digital Services Act by allowing the spread of illegal graphic content and disinformation. Such accusations are supported by research from Amnesty International, which shows that social media algorithms often “disproportionately amplify inflammatory content” as a means of driving up engagement.

Attempts to regulate the spread of hate speech and violent content on social media platforms, meanwhile, have been far from fair. An independent report commissioned by Meta following the previous round of Israeli attacks on the Gaza Strip in May 2021 found a strong disparity in the policing of Arabic-language content compared with Hebrew-language content, stating that “Arabic content had greater over-enforcement (e.g., erroneously removing Palestinian voices) on a per user basis…[and] proactive detection rates of potentially violating Arabic content were significantly higher than proactive detection rates of potentially violating Hebrew content.” Not only is Arabic-language content subject to ill-fitting algorithmic screening, but the platform also uses an Arabic AI “hostile speech classifier” for which there is notably no Hebrew equivalent.

Despite vague commitments by Meta to abide by the report’s recommendations, there have been repeated instances of flagrant bias during the latest outbreak of conflict. For instance, Meta recently lowered the ‘threshold of certainty’ for hiding hostile content from 80 percent to 25 percent for content originating in most Middle Eastern countries, meaning its systems now hide content they are only 25 percent confident is hostile. The company also recently apologised for mistakenly inserting the word “terrorist” into translations of Instagram profiles containing the words “Palestinian” and “Alhamdulillah”. Palestinian and Middle Eastern social media users are feeling the effects of biased content moderation, frequently citing instances of ‘shadow banning’ on posts related to events in Palestine. Shadow banning involves quietly reducing the reach of content so that fewer users see it. Although activists and media watchdogs have documented the practice many times, Meta has consistently denied the accusations.

For the ordinary media consumer, confronted with an online space characterised by uneven content moderation that privileges hate speech and violence and allows bias and propaganda to go unchecked, accessing reliable information about the conflict is a minefield. At Dalil, this is where we have sought to focus our attention. By supporting MENA fact-checking organisations with a custom-built, AI-driven Arabic-language platform, we are helping to automate manual, time-consuming content verification processes and so increase the speed, scale and impact of fact-checking in the region. Free tools such as Dalil Check, a discourse analysis tool that detects subjectivity and the rhetorical devices commonly used to mislead people, are also available to members of the public seeking help in separating fact from fiction.

Amid ongoing deflection and inaction by tech companies on fairly regulating online content, the responsibility for sorting the signal from the noise falls to ordinary citizens. While not sufficient on their own, tools like those offered by Dalil are an essential life raft for media consumers wading through information disorder.